[Paper Review] RoMaP: Robust 3D-Masked Part-level Editing in 3D Gaussian Splatting with Regularized Score Distillation Sampling

Introduction

In this post, I review RoMaP: Robust 3D-Masked Part-level Editing in 3D Gaussian Splatting with Regularized Score Distillation Sampling by Hayeon Kim et al. (Seoul National University), published at ICCV 2025.

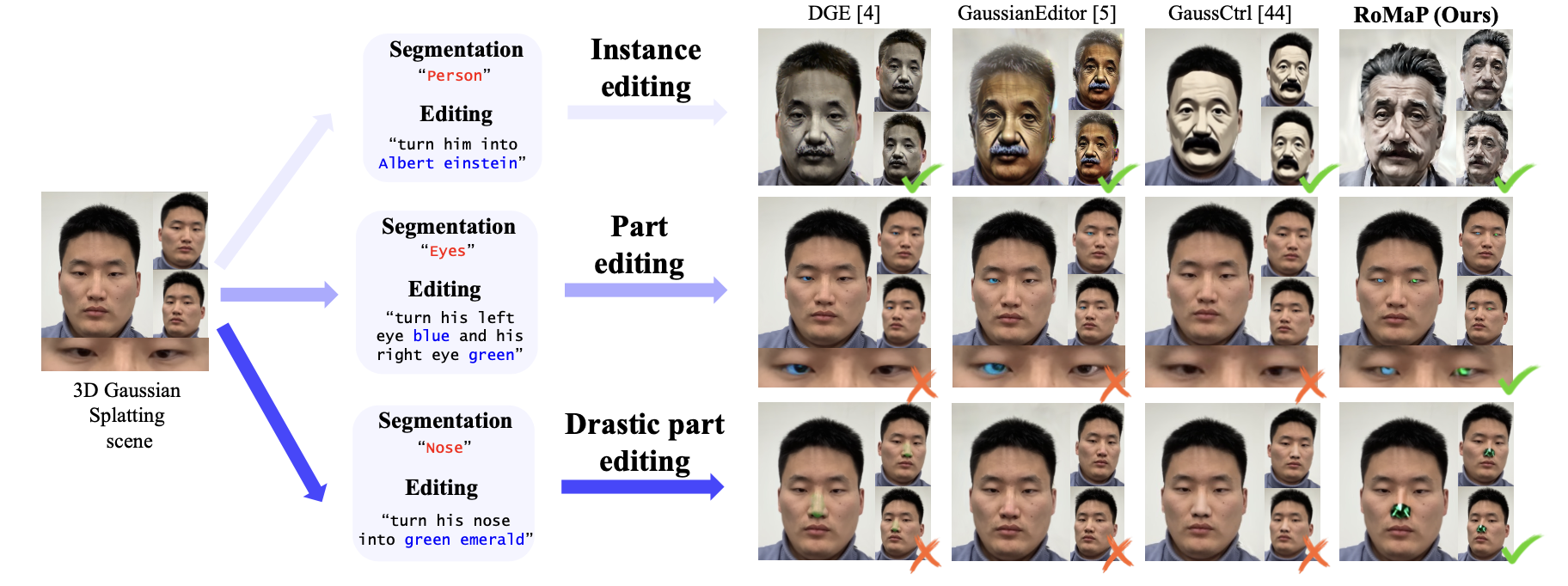

RoMaP tackles one of the most persistent challenges in neural rendering: precise, local 3D editing. While previous methods excel at global style transfer, they struggle to modify specific parts (e.g., “change just the nose”) without bleeding into the background or losing geometric coherence.

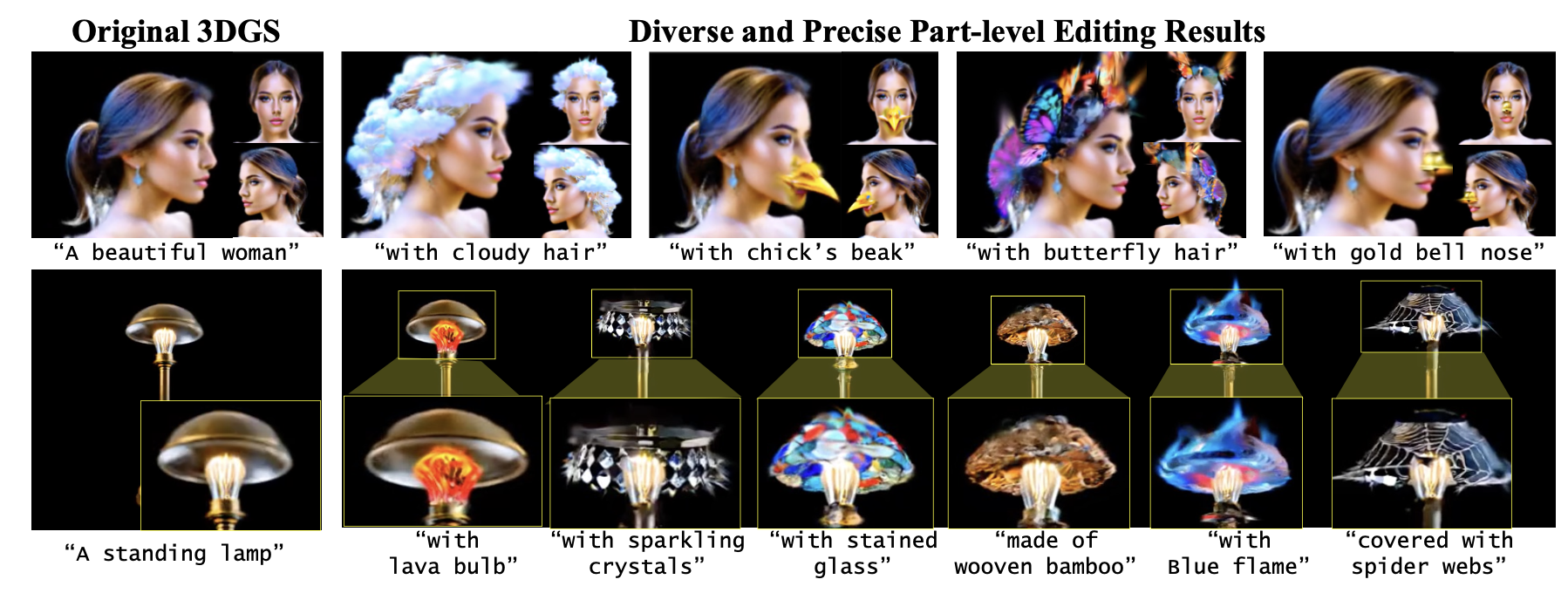

RoMaP solves this by modeling segmentation labels as view-dependent signals with spherical harmonics and by introducing a regularized editing loss guided by a novel latent mixing strategy. This allows for drastic topological changes, such as turning a human face into a goat or replacing hair with jellyfish tentacles, while keeping the background unchanged.

Paper Info

- Title: Robust 3D-Masked Part-level Editing in 3D Gaussian Splatting with Regularized Score Distillation Sampling

- Authors: Hayeon Kim, Ji Ha Jang, Se Young Chun

- Affiliations: Seoul National University (SNU)

- Conference: ICCV 2025

- Code: RoMaP (Project Page)

Background: The Challenge of Local 3D Editing

Most existing 3D editing frameworks (e.g., GaussianEditor, GaussCtrl) rely on a “2D-update-then-project” loop:

- Render a 2D view.

- Edit it with a diffusion model (like InstructPix2Pix).

- Project the changes back to 3D.

This approach fails for part-level editing due to inconsistent 2D segmentation. A segmentor like SAM might identify an eye in the frontal view but miss it in the side view. When these conflicting 2D masks are projected into 3D, the result is a noisy, broken mask that leads to artifacts. Furthermore, standard Score Distillation Sampling (SDS) loss is inherently ambiguous and struggles to force drastic geometric changes against strong existing priors.

Problem Definition

The goal is to edit a specific part \(\mathcal{P}\) of a 3D scene represented by a set of Gaussians \(\Omega\), guided by a text prompt \(p_{edit}\), while keeping the rest of the scene \(\Omega \setminus \mathcal{P}\) frozen.

This requires two distinct solutions:

- Robust 3D Masking: Explicitly defining \(\mathcal{P}\) in 3D space despite noisy 2D inputs.

- Targeted Deformation: Forcing the Gaussians in \(\mathcal{P}\) to change geometry/texture drastically (e.g., skin\(\to\) metal) without “fighting” the original appearance.

Architecture Overview

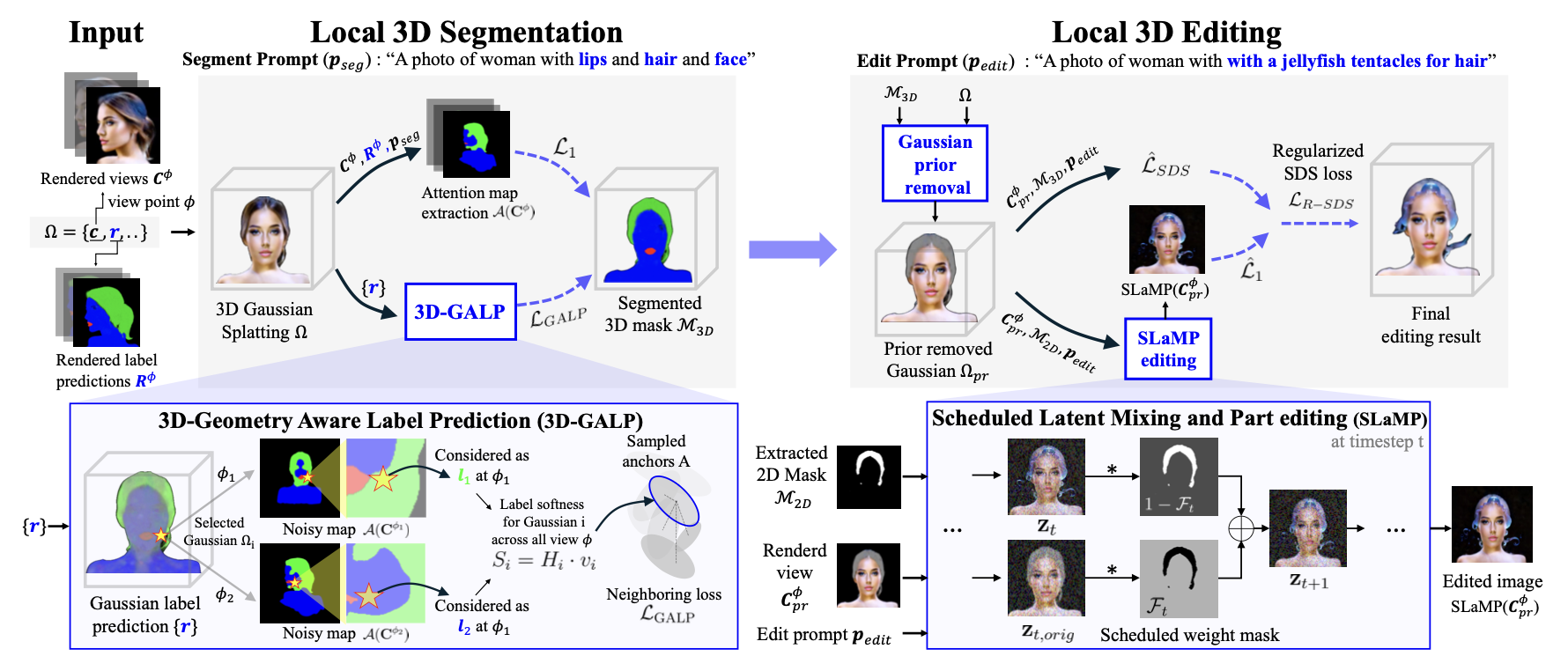

RoMaP proposes a two-stage pipeline: Local 3D Segmentation followed by Local 3D Editing.

- 3D-GALP (Segmentation Phase): Generates a view-consistent 3D mask by modeling labels as spherical harmonics and refining them with neighbor consistency.

- Regularized SDS (Editing Phase): Edits the masked region using a “Prior Removal” strategy and a guidance image produced by the novel SLaMP (Scheduled Latent Mixing and Part editing) procedure to enforce precise changes.

1. 3D-GALP: Geometry-Aware Label Prediction

Standard 3D segmentation assigns a discrete label to each point. However, 3D Gaussians at object boundaries are “semi-transparent” and “view-dependent”: a Gaussian at the edge of a nose might belong to the “nose” in one view and the “cheek” in another.

RoMaP addresses this by augmenting each Gaussian \(\Omega_i\) with a learnable label parameter \(\mathbf{r}_i\), modeled as Spherical Harmonics (SH) coefficients (just like color):

\[\Omega_i = \{p_i, s_i, q_i, \alpha_i, c_i, \mathbf{r}_i\}\]

Key Innovation: Label Softness

The model computes a “softness” score \(S_i\) for every Gaussian based on the variance of its rendered label across views (\(v_i\)) and its entropy (\(H_i\)):

\[S_i = H_i \cdot v_i\]

- High \(S_i\): The Gaussian is at a boundary (ambiguous).

- Low \(S_i\): The Gaussian is deeply inside a part (confident).
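To make the softness score concrete, here is a minimal sketch in plain Python. The review only states that \(S_i\) combines an entropy term and a cross-view variance term, so the exact definitions used here are assumptions for illustration: \(H_i\) is taken as the entropy of the view-averaged label distribution, and \(v_i\) as the per-class variance across views, averaged over classes. The function names and the toy inputs are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy (natural log) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def softness(per_view_probs):
    """Softness S = H * v for a single Gaussian.

    per_view_probs: one label distribution per view (assumed input format).
    H: entropy of the view-averaged distribution (assumed definition).
    v: per-class variance across views, averaged over classes (assumed).
    """
    n_views = len(per_view_probs)
    n_classes = len(per_view_probs[0])
    mean = [sum(view[c] for view in per_view_probs) / n_views
            for c in range(n_classes)]
    var = sum(
        sum((view[c] - mean[c]) ** 2 for view in per_view_probs) / n_views
        for c in range(n_classes)
    ) / n_classes
    return entropy(mean) * var

# Toy Gaussian deep inside the "nose": same confident label in every view.
interior = [[0.98, 0.02], [0.97, 0.03], [0.99, 0.01]]
# Toy Gaussian on the nose/cheek boundary: the label flips with the viewpoint.
boundary = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]

s_interior = softness(interior)
s_boundary = softness(boundary)
print(f"interior: {s_interior:.6f}, boundary: {s_boundary:.6f}")
```

With these toy inputs the boundary Gaussian receives a much larger score than the interior one, which is the behavior the two bullets above describe.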

Using this score, RoMaP samples Anchors (highly confident points) and applies an Anchor-based Neighboring Loss:

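\[\mathcal{L}_{GALP} = \sum_{i \in \mathcal{A}} \left[ \frac{1}{K} \sum_{k \in \mathcal{N}_K(i)} \| \mathbf{r}_i - \mathbf{r}_k \|_1 \right]\]

Here \(\mathcal{A}\) is the set of anchors and \(\mathcal{N}_K(i)\) denotes the \(K\) nearest neighbors of anchor \(i\). Minimizing this loss pulls the labels of neighboring Gaussians, including ambiguous boundary ones, toward the labels of their confident anchors, resulting in a crisp, clean 3D mask \(M_{3D}\).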
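The anchor-based neighboring loss above can be sketched in a few lines of plain Python. This is an illustrative toy, not the authors' implementation: neighbors are found by brute-force Euclidean distance, labels are short lists rather than SH coefficient tensors, and all names and data are hypothetical.

```python
import math

def k_nearest(positions, i, k):
    """Indices of the k Gaussians closest to Gaussian i (excluding i itself)."""
    others = [j for j in range(len(positions)) if j != i]
    others.sort(key=lambda j: math.dist(positions[i], positions[j]))
    return others[:k]

def anchor_neighbor_loss(positions, labels, anchors, k):
    """Sum over anchors of the mean L1 distance to the labels of K neighbors.

    Assumes k <= len(positions) - 1 so every anchor has k neighbors.
    """
    total = 0.0
    for i in anchors:
        neighbors = k_nearest(positions, i, k)
        l1 = [sum(abs(a - b) for a, b in zip(labels[i], labels[j]))
              for j in neighbors]
        total += sum(l1) / k
    return total

# Four toy Gaussians on a line; Gaussian 0 is a confident anchor.
positions = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0), (5.0, 0.0, 0.0)]
labels = [[1.0, 0.0], [0.5, 0.5], [1.0, 0.0], [0.0, 1.0]]

loss_before = anchor_neighbor_loss(positions, labels, anchors=[0], k=2)

# After the ambiguous neighbor adopts the anchor's label, the loss vanishes.
labels[1] = [1.0, 0.0]
loss_after = anchor_neighbor_loss(positions, labels, anchors=[0], k=2)
print(loss_before, loss_after)
```

The distant Gaussian 3 never enters the sum because it is not among the two nearest neighbors of the anchor, so the regularization stays local.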

2. SLaMP: Scheduled Latent Mixing for Guidance

To edit the segmented part, RoMaP needs a high-quality 2D reference image to guide the SDS loss. Standard diffusion editing often alters the background or fails to generate the new object structure.

RoMaP introduces SLaMP (Scheduled Latent Mixing and Part editing). It mixes the latents of the original image (\(z_{t, orig}\)) and the target generation (\(z_t\)) using a dynamic schedule:

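\[z_{t+1} = z_t(1 - F_t \cdot (1 - M_{2D})) + z_{t, orig} F_t \cdot (1 - M_{2D})\]

Here \(M_{2D}\) is the 2D mask of the part being edited (1 inside the part, 0 in the background), so the original latents are only mixed into the background region.

- \(F_t\) (Mixing Coefficient): This is not constant. It follows a sharp transition schedule at timestep \(t_s\).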

- Early steps: The model is free to generate new geometry (low \(F_t\)).

- Late steps: The model is forced to lock onto the original background (high \(F_t\)).

This produces a SLaMP-edited image that contains the new part (e.g., a beak instead of a mouth) while preserving the original background.
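The mixing rule is easy to check on toy values. The sketch below assumes a hard step schedule (\(F_t = 0\) before \(t_s\), \(F_t = 1\) from \(t_s\) onward) and a step index that increases during sampling; the review only says the transition is sharp, so both choices are assumptions. Latents are flat lists here, and all names are hypothetical.

```python
def mixing_coefficient(step, switch_step, low=0.0, high=1.0):
    """Step schedule: `low` before the switch step, `high` from it onward."""
    return high if step >= switch_step else low

def mix_latents(z_t, z_orig, mask_2d, f_t):
    """Element-wise z_t * (1 - F_t * (1 - M)) + z_orig * F_t * (1 - M)."""
    return [
        z * (1.0 - f_t * (1.0 - m)) + zo * f_t * (1.0 - m)
        for z, zo, m in zip(z_t, z_orig, mask_2d)
    ]

z_t = [2.0, 2.0]        # latent of the edited generation
z_orig = [-1.0, -1.0]   # latent of the original image
mask_2d = [1.0, 0.0]    # first element is inside the part, second is background

# Early step: F_t is low, so the generation is left untouched everywhere.
early = mix_latents(z_t, z_orig, mask_2d, mixing_coefficient(step=3, switch_step=10))
# Late step: F_t is high, so the background snaps back to the original latent.
late = mix_latents(z_t, z_orig, mask_2d, mixing_coefficient(step=12, switch_step=10))
print(early, late)
```

Inside the mask the edited latent is kept at every step, while the background element is replaced by the original latent only after the switch, matching the early and late behavior described above.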

3. Regularized Score Distillation Sampling (SDS)

With the 3D Mask \(M_{3D}\) and the SLaMP reference image, RoMaP updates the Gaussians using a Regularized SDS Loss:

\[\hat{\mathcal{L}}_{R-SDS} = \lambda_1 \mathcal{L}_{SDS}(c_\phi^{pr}, p_{edit}) + \lambda_2 \mathcal{L}_1(c_\phi^{pr}, \text{SLaMP}(c_\phi^{pr}))\]

Three Critical Components:

- Gaussian Prior Removal: Before computing the loss, the color of the masked Gaussians is set to neutral (e.g., white). This prevents the SDS loss from trying to “preserve” the old texture (like skin color) when generating a new material (like gold).

- Anchored L1 Loss: The second term (\(\mathcal{L}_1\)) forces the rendering to match the SLaMP image pixel-for-pixel, providing the strong geometric guidance that standard SDS lacks.

- Strict Masking: Gradients are strictly blocked for any Gaussian outside \(M_{3D}\), ensuring zero background degradation.
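The three components can be illustrated without a diffusion model. In the sketch below the SDS term is a placeholder scalar (`sds_value`), since real SDS is defined through a diffusion-model gradient rather than a simple loss value; the loss weights, the white neutral color, and every function name are hypothetical choices for illustration, not the authors' code.

```python
def remove_prior(colors, mask_3d, neutral=(1.0, 1.0, 1.0)):
    """Reset the color of masked Gaussians to a neutral value before editing."""
    return [neutral if m else c for c, m in zip(colors, mask_3d)]

def l1_loss(rendered, target):
    """Mean absolute pixel difference between a render and the guidance image."""
    return sum(abs(r - t) for r, t in zip(rendered, target)) / len(rendered)

def regularized_loss(sds_value, rendered, slamp_image, lam1, lam2):
    """lam1 * L_SDS + lam2 * L1(render, SLaMP image); sds_value is a stand-in."""
    return lam1 * sds_value + lam2 * l1_loss(rendered, slamp_image)

def mask_gradients(grads, mask_3d):
    """Zero the gradient of every Gaussian outside the 3D mask."""
    return [g if m else tuple(0.0 for _ in g) for g, m in zip(grads, mask_3d)]

# Two toy Gaussians: the first is inside the 3D mask, the second is background.
mask_3d = [True, False]
colors = [(0.8, 0.6, 0.5), (0.1, 0.2, 0.3)]
grads = [(0.5, -0.2, 0.1), (0.3, 0.3, 0.3)]

neutral_colors = remove_prior(colors, mask_3d)
loss = regularized_loss(sds_value=2.0, rendered=[0.5, 0.5],
                        slamp_image=[1.0, 0.0], lam1=1.0, lam2=10.0)
masked_grads = mask_gradients(grads, mask_3d)
print(neutral_colors, loss, masked_grads)
```

Only the masked Gaussian loses its original color and receives a gradient; the background Gaussian keeps both its color and its parameters, which is what keeps the rest of the scene frozen.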

Results Summary

RoMaP was evaluated on both reconstructed real-world scenes (IN2N dataset) and generated assets.

| Method | CLIP Score \(\uparrow\) | Directional CLIP \(\uparrow\) | VQA Score \(\uparrow\) |
|---|---|---|---|
| GaussCtrl | 0.182 | 0.044 | 0.190 |
| GaussianEditor | 0.179 | 0.087 | 0.370 |
| RoMaP (Ours) | 0.205 | 0.277 | 0.723 |

Qualitative Wins:

- Drastic Geometry: Successfully turned a human nose into a “croissant” and a “golden bell,” which baselines failed to do (they just painted the nose yellow).

- Precision: Edited “left eye blue, right eye green” distinctly, whereas baselines blended the colors.

Limitations

- Dependency on SLaMP: The quality of the 3D edit is capped by the quality of the 2D SLaMP generation. If the diffusion model fails to generate the concept in 2D, RoMaP cannot recover it in 3D.

- Static Scenes Only: The current framework focuses on static 3DGS and does not yet account for temporal consistency in 4D (dynamic) Gaussian Splatting.

Takeaways

RoMaP outperforms both compared baselines on all three reported metrics and moves controlled 3D editing beyond simple “text-based coloring” toward structural part replacement.

Key lessons for future research:

- Labels are Signals: Treating discrete labels (segmentation) as continuous signals (Spherical Harmonics) is a powerful way to handle ambiguity in 3D representations.

- Guidance over Optimization: Standard SDS optimization is insufficient for drastic changes; providing an explicit “target image” (via SLaMP) acts as a necessary anchor for the optimization process.